| [GIN] 2025/02/03 - 22:02:45 | 200 | 0s | 127.0.0.1 | HEAD "/"

[GIN] 2025/02/03 - 22:02:45 | 200 | 54.7458ms | 127.0.0.1 | POST "/api/show"

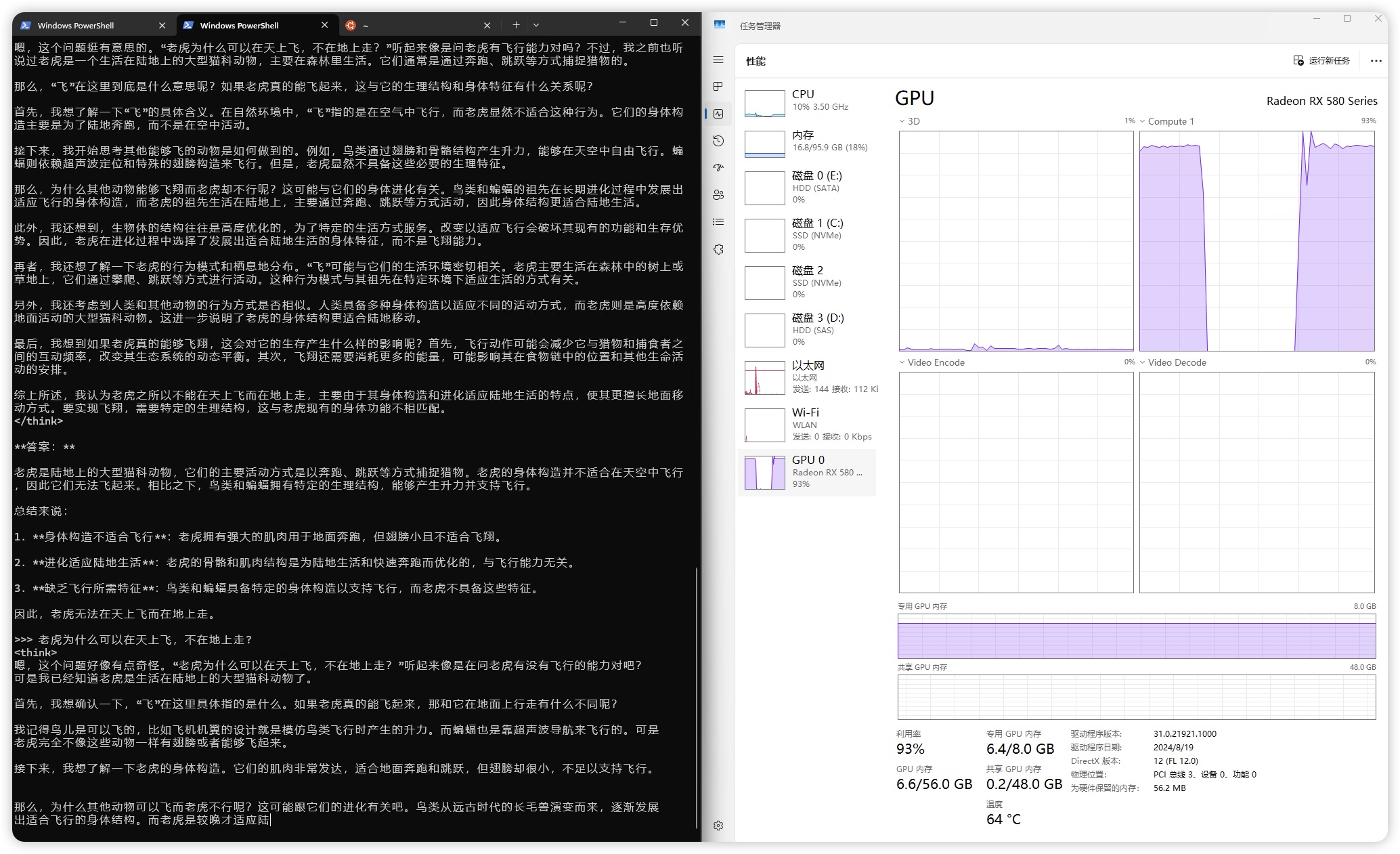

time=2025-02-03T22:02:46.336+08:00 level=INFO source=sched.go:185 msg="one or more GPUs detected that are unable to accurately report free memory - disabling default concurrency"

time=2025-02-03T22:02:46.379+08:00 level=INFO source=sched.go:714 msg="new model will fit in available VRAM in single GPU, loading" model=C:\Users\lewlh\.ollama\models\blobs\sha256-96c415656d377afbff962f6cdb2394ab092ccbcbaab4b82525bc4ca800fe8a49 gpu=0 parallel=4 available=8455716864 required="5.6 GiB"

time=2025-02-03T22:02:46.930+08:00 level=INFO source=server.go:104 msg="system memory" total="95.9 GiB" free="83.9 GiB" free_swap="106.4 GiB"

time=2025-02-03T22:02:46.931+08:00 level=INFO source=memory.go:356 msg="offload to rocm" layers.requested=-1 layers.model=29 layers.offload=29 layers.split="" memory.available="[7.9 GiB]" memory.gpu_overhead="0 B" memory.required.full="5.6 GiB" memory.required.partial="5.6 GiB" memory.required.kv="448.0 MiB" memory.required.allocations="[5.6 GiB]" memory.weights.total="4.1 GiB" memory.weights.repeating="3.7 GiB" memory.weights.nonrepeating="426.4 MiB" memory.graph.full="478.0 MiB" memory.graph.partial="730.4 MiB"

time=2025-02-03T22:02:46.947+08:00 level=INFO source=server.go:376 msg="starting llama server" cmd="C:\\Users\\lewlh\\AppData\\Local\\Programs\\Ollama\\lib\\ollama\\runners\\rocm_avx\\ollama_llama_server.exe runner --model C:\\Users\\lewlh\\.ollama\\models\\blobs\\sha256-96c415656d377afbff962f6cdb2394ab092ccbcbaab4b82525bc4ca800fe8a49 --ctx-size 8192 --batch-size 512 --n-gpu-layers 29 --threads 10 --parallel 4 --port 65200"

time=2025-02-03T22:02:47.241+08:00 level=INFO source=sched.go:449 msg="loaded runners" count=1

time=2025-02-03T22:02:47.241+08:00 level=INFO source=server.go:555 msg="waiting for llama runner to start responding"

time=2025-02-03T22:02:47.242+08:00 level=INFO source=server.go:589 msg="waiting for server to become available" status="llm server error"

time=2025-02-03T22:02:47.290+08:00 level=INFO source=runner.go:938 msg="starting go runner"

ggml_cuda_init: GGML_CUDA_FORCE_MMQ: no

ggml_cuda_init: GGML_CUDA_FORCE_CUBLAS: no

ggml_cuda_init: found 1 ROCm devices:

Device 0: Radeon RX 580 Series, compute capability 8.0, VMM: no

time=2025-02-03T22:02:48.293+08:00 level=INFO source=runner.go:939 msg=system info="ROCm : NO_PEER_COPY = 1 | PEER_MAX_BATCH_SIZE = 128 | CPU : SSE3 = 1 | SSSE3 = 1 | AVX = 1 | LLAMAFILE = 1 | AARCH64_REPACK = 1 | cgo(gcc)" threads=10

llama_load_model_from_file: using device ROCm0 (Radeon RX 580 Series) - 8064 MiB free

time=2025-02-03T22:02:48.294+08:00 level=INFO source=.:0 msg="Server listening on 127.0.0.1:65200"

llama_model_loader: loaded meta data with 26 key-value pairs and 339 tensors from C:\Users\lewlh\.ollama\models\blobs\sha256-96c415656d377afbff962f6cdb2394ab092ccbcbaab4b82525bc4ca800fe8a49 (version GGUF V3 (latest))

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = qwen2

llama_model_loader: - kv 1: general.type str = model

llama_model_loader: - kv 2: general.name str = DeepSeek R1 Distill Qwen 7B

llama_model_loader: - kv 3: general.basename str = DeepSeek-R1-Distill-Qwen

llama_model_loader: - kv 4: general.size_label str = 7B

llama_model_loader: - kv 5: qwen2.block_count u32 = 28

llama_model_loader: - kv 6: qwen2.context_length u32 = 131072

llama_model_loader: - kv 7: qwen2.embedding_length u32 = 3584

llama_model_loader: - kv 8: qwen2.feed_forward_length u32 = 18944

llama_model_loader: - kv 9: qwen2.attention.head_count u32 = 28

llama_model_loader: - kv 10: qwen2.attention.head_count_kv u32 = 4

llama_model_loader: - kv 11: qwen2.rope.freq_base f32 = 10000.000000

llama_model_loader: - kv 12: qwen2.attention.layer_norm_rms_epsilon f32 = 0.000001

llama_model_loader: - kv 13: general.file_type u32 = 15

llama_model_loader: - kv 14: tokenizer.ggml.model str = gpt2

llama_model_loader: - kv 15: tokenizer.ggml.pre str = qwen2

llama_model_loader: - kv 16: tokenizer.ggml.tokens arr[str,152064] = ["!", "\"", "

llama_model_loader: - kv 17: tokenizer.ggml.token_type arr[i32,152064] = [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, ...

llama_model_loader: - kv 18: tokenizer.ggml.merges arr[str,151387] = ["Ġ Ġ", "ĠĠ ĠĠ", "i n", "Ġ t",...

llama_model_loader: - kv 19: tokenizer.ggml.bos_token_id u32 = 151646

llama_model_loader: - kv 20: tokenizer.ggml.eos_token_id u32 = 151643

llama_model_loader: - kv 21: tokenizer.ggml.padding_token_id u32 = 151643

llama_model_loader: - kv 22: tokenizer.ggml.add_bos_token bool = true

llama_model_loader: - kv 23: tokenizer.ggml.add_eos_token bool = false

llama_model_loader: - kv 24: tokenizer.chat_template str = {% if not add_generation_prompt is de...

llama_model_loader: - kv 25: general.quantization_version u32 = 2

llama_model_loader: - type f32: 141 tensors

llama_model_loader: - type q4_K: 169 tensors

llama_model_loader: - type q6_K: 29 tensors

time=2025-02-03T22:02:48.497+08:00 level=INFO source=server.go:589 msg="waiting for server to become available" status="llm server loading model"

llm_load_vocab: special_eos_id is not in special_eog_ids - the tokenizer config may be incorrect

llm_load_vocab: special tokens cache size = 22

llm_load_vocab: token to piece cache size = 0.9310 MB

llm_load_print_meta: format = GGUF V3 (latest)

llm_load_print_meta: arch = qwen2

llm_load_print_meta: vocab type = BPE

llm_load_print_meta: n_vocab = 152064

llm_load_print_meta: n_merges = 151387

llm_load_print_meta: vocab_only = 0

llm_load_print_meta: n_ctx_train = 131072

llm_load_print_meta: n_embd = 3584

llm_load_print_meta: n_layer = 28

llm_load_print_meta: n_head = 28

llm_load_print_meta: n_head_kv = 4

llm_load_print_meta: n_rot = 128

llm_load_print_meta: n_swa = 0

llm_load_print_meta: n_embd_head_k = 128

llm_load_print_meta: n_embd_head_v = 128

llm_load_print_meta: n_gqa = 7

llm_load_print_meta: n_embd_k_gqa = 512

llm_load_print_meta: n_embd_v_gqa = 512

llm_load_print_meta: f_norm_eps = 0.0e+00

llm_load_print_meta: f_norm_rms_eps = 1.0e-06

llm_load_print_meta: f_clamp_kqv = 0.0e+00

llm_load_print_meta: f_max_alibi_bias = 0.0e+00

llm_load_print_meta: f_logit_scale = 0.0e+00

llm_load_print_meta: n_ff = 18944

llm_load_print_meta: n_expert = 0

llm_load_print_meta: n_expert_used = 0

llm_load_print_meta: causal attn = 1

llm_load_print_meta: pooling type = 0

llm_load_print_meta: rope type = 2

llm_load_print_meta: rope scaling = linear

llm_load_print_meta: freq_base_train = 10000.0

llm_load_print_meta: freq_scale_train = 1

llm_load_print_meta: n_ctx_orig_yarn = 131072

llm_load_print_meta: rope_finetuned = unknown

llm_load_print_meta: ssm_d_conv = 0

llm_load_print_meta: ssm_d_inner = 0

llm_load_print_meta: ssm_d_state = 0

llm_load_print_meta: ssm_dt_rank = 0

llm_load_print_meta: ssm_dt_b_c_rms = 0

llm_load_print_meta: model type = 7B

llm_load_print_meta: model ftype = Q4_K - Medium

llm_load_print_meta: model params = 7.62 B

llm_load_print_meta: model size = 4.36 GiB (4.91 BPW)

llm_load_print_meta: general.name = DeepSeek R1 Distill Qwen 7B

llm_load_print_meta: BOS token = 151646 '<|begin▁of▁sentence|>'

llm_load_print_meta: EOS token = 151643 '<|end▁of▁sentence|>'

llm_load_print_meta: EOT token = 151643 '<|end▁of▁sentence|>'

llm_load_print_meta: PAD token = 151643 '<|end▁of▁sentence|>'

llm_load_print_meta: LF token = 148848 'ÄĬ'

llm_load_print_meta: FIM PRE token = 151659 '<|fim_prefix|>'

llm_load_print_meta: FIM SUF token = 151661 '<|fim_suffix|>'

llm_load_print_meta: FIM MID token = 151660 '<|fim_middle|>'

llm_load_print_meta: FIM PAD token = 151662 '<|fim_pad|>'

llm_load_print_meta: FIM REP token = 151663 '<|repo_name|>'

llm_load_print_meta: FIM SEP token = 151664 '<|file_sep|>'

llm_load_print_meta: EOG token = 151643 '<|end▁of▁sentence|>'

llm_load_print_meta: EOG token = 151662 '<|fim_pad|>'

llm_load_print_meta: EOG token = 151663 '<|repo_name|>'

llm_load_print_meta: EOG token = 151664 '<|file_sep|>'

llm_load_print_meta: max token length = 256

llm_load_tensors: offloading 28 repeating layers to GPU

llm_load_tensors: offloading output layer to GPU

llm_load_tensors: offloaded 29/29 layers to GPU

llm_load_tensors: CPU_Mapped model buffer size = 292.36 MiB

llm_load_tensors: ROCm0 model buffer size = 4168.09 MiB

llama_new_context_with_model: n_seq_max = 4

llama_new_context_with_model: n_ctx = 8192

llama_new_context_with_model: n_ctx_per_seq = 2048

llama_new_context_with_model: n_batch = 2048

llama_new_context_with_model: n_ubatch = 512

llama_new_context_with_model: flash_attn = 0

llama_new_context_with_model: freq_base = 10000.0

llama_new_context_with_model: freq_scale = 1

llama_new_context_with_model: n_ctx_per_seq (2048) < n_ctx_train (131072) -- the full capacity of the model will not be utilized

llama_kv_cache_init: ROCm0 KV buffer size = 448.00 MiB

llama_new_context_with_model: KV self size = 448.00 MiB, K (f16): 224.00 MiB, V (f16): 224.00 MiB

ggml_cuda_host_malloc: failed to allocate 2.38 MiB of pinned memory: out of memory

llama_new_context_with_model: CPU output buffer size = 2.38 MiB

ggml_cuda_host_malloc: failed to allocate 23.01 MiB of pinned memory: out of memory

llama_new_context_with_model: ROCm0 compute buffer size = 492.00 MiB

llama_new_context_with_model: ROCm_Host compute buffer size = 23.01 MiB

llama_new_context_with_model: graph nodes = 986

llama_new_context_with_model: graph splits = 2

time=2025-02-03T22:02:53.003+08:00 level=INFO source=server.go:594 msg="llama runner started in 5.76 seconds"

[GIN] 2025/02/03 - 22:02:53 | 200 | 7.268022s | 127.0.0.1 | POST "/api/generate"

|
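As a sanity check on the memory figures in the log, the KV cache size and the per-sequence context can be reproduced from the printed parameters. This is a minimal sketch, assuming the cache holds one f16 K vector and one f16 V vector per layer per context slot; the helper name `kv_buffer_mib` is made up for illustration and is not part of Ollama or llama.cpp.

```python
# Values copied from the log above.
n_layer = 28        # llm_load_print_meta: n_layer
n_ctx = 8192        # llama_new_context_with_model: n_ctx (--ctx-size 8192)
n_seq_max = 4       # llama_new_context_with_model: n_seq_max (--parallel 4)
n_embd_k_gqa = 512  # llm_load_print_meta: n_embd_k_gqa
n_embd_v_gqa = 512  # llm_load_print_meta: n_embd_v_gqa

BYTES_PER_F16 = 2   # the log reports K (f16) and V (f16)
MIB = 1024 * 1024


def kv_buffer_mib(layers: int, ctx: int, embd_gqa: int, elem_bytes: int) -> float:
    """Hypothetical helper: size in MiB of one K (or V) buffer.

    Assumes one vector of `embd_gqa` elements per layer per context slot.
    """
    return layers * ctx * embd_gqa * elem_bytes / MIB


k_mib = kv_buffer_mib(n_layer, n_ctx, n_embd_k_gqa, BYTES_PER_F16)
v_mib = kv_buffer_mib(n_layer, n_ctx, n_embd_v_gqa, BYTES_PER_F16)

# The total context is shared between the parallel sequences.
n_ctx_per_seq = n_ctx // n_seq_max

print(f"K: {k_mib:.2f} MiB, V: {v_mib:.2f} MiB, total: {k_mib + v_mib:.2f} MiB")
print(f"n_ctx_per_seq: {n_ctx_per_seq}")
```

Under that assumption the numbers match the log: 224.00 MiB each for K and V, 448.00 MiB in total, and 2048 tokens of context per sequence, which is why the runner warns that `n_ctx_per_seq (2048) < n_ctx_train (131072)`.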